1.2 Logistic Regression as a Neural Network

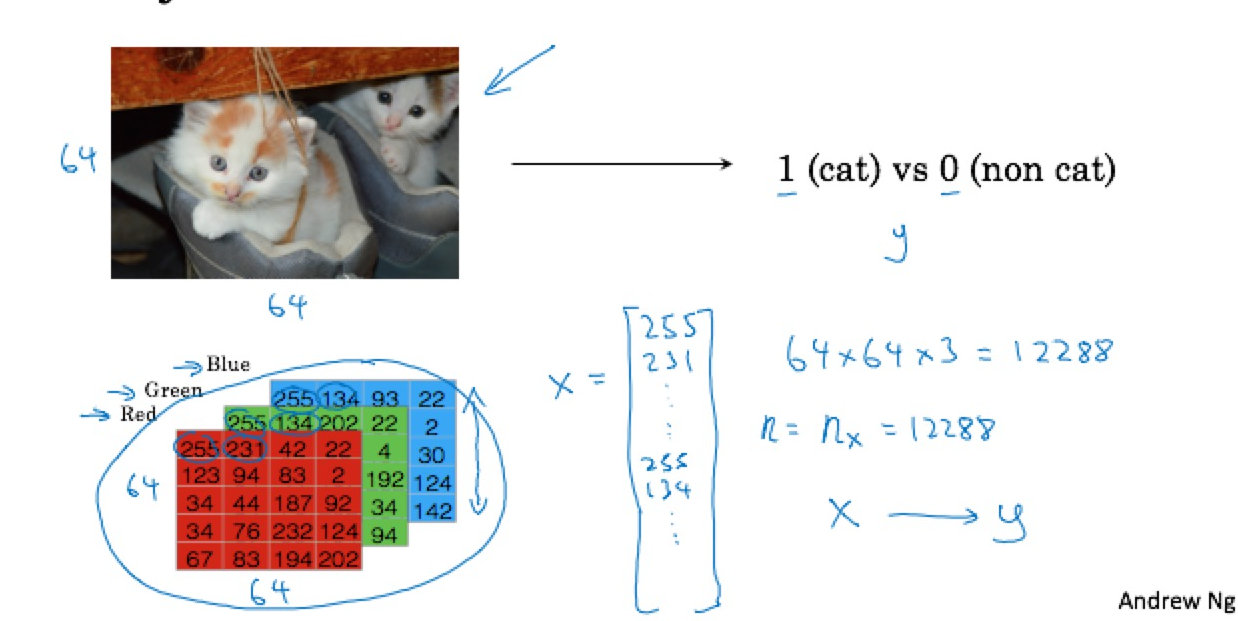

1.2.1 Binary Classification

Binary classification is the task of classifying the elements of a given set into two groups (predicting which group each one belongs to) on the basis of a classification rule.

-- wikipedia

2. Logistic Regression

In statistical modeling, regression analysis is a set of statistical processes for estimating the relationships among variables. -- wikipedia

Logistic regression is a learning algorithm used in supervised learning problems where the output labels 𝑦 are all either zero or one. The goal of logistic regression is to minimize the error between its predictions and the training data. -- Andrew Ng

In linear regression we tried to predict the value of $y^{(i)}$ for the $i$'th example $x^{(i)}$ using a linear function $\hat{y}^{(i)} = w^\top x^{(i)} + b$. This is clearly not a great solution for predicting binary-valued labels ($y^{(i)} \in \{0, 1\}$). In logistic regression we use a different hypothesis class to try to predict the probability that a given example belongs to the “1” class versus the probability that it belongs to the “0” class.

Summary:

Logistic regression is a regression model where the dependent variable (DV) is categorical.

It usually refers to the case of a binary dependent variable, where the output can take only two values, "0" and "1". Cases where the dependent variable has more than two outcome categories may be analysed with multinomial logistic regression (also known as softmax regression).

It uses the logistic function (sigmoid function), $\sigma(z) = \frac{1}{1 + e^{-z}}$.
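To make the idea of predicting a probability concrete, here is a minimal NumPy sketch of the sigmoid and the resulting prediction. The helper names (`sigmoid`, `predict_proba`) and the toy values of `w`, `b` and `X` are assumptions chosen only for illustration.

```python
import numpy as np

def sigmoid(z):
    """Logistic (sigmoid) function: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def predict_proba(w, b, X):
    """Estimated probability that each row of X belongs to class "1"."""
    return sigmoid(X @ w + b)

# Hypothetical toy parameters and inputs (not from the notes).
w = np.array([1.0, -2.0])
b = 0.5
X = np.array([[0.0, 0.0], [3.0, 1.0], [-1.0, 2.0]])

probs = predict_proba(w, b, X)
labels = (probs >= 0.5).astype(int)  # threshold at 0.5 to get a 0/1 label
```

Thresholding at 0.5 is one common convention for turning the probability into a class label; other thresholds are possible.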

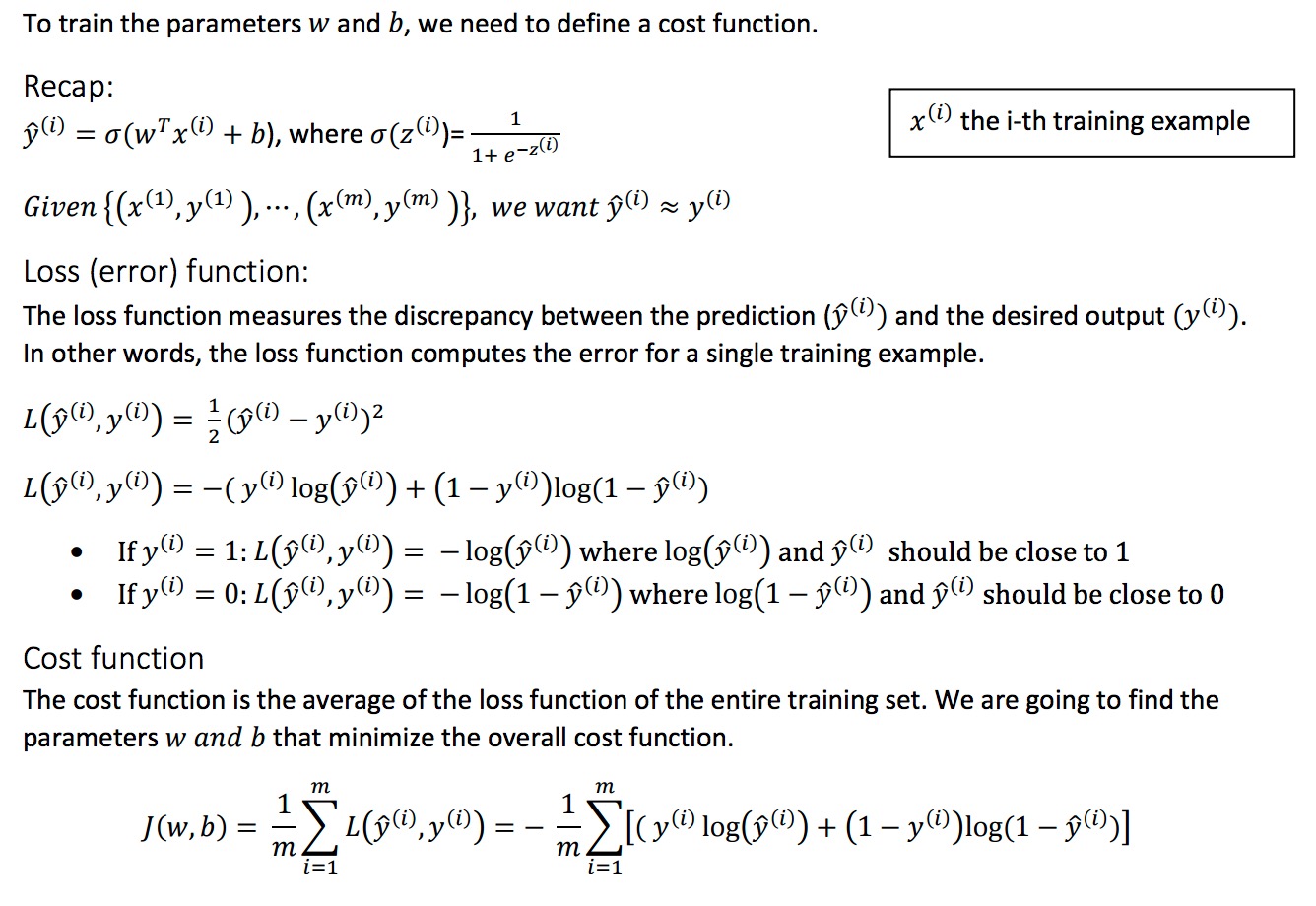

3. Logistic Regression Cost Function

Additional note:

- Squared error should not be used as the loss (error) function here. With it, the optimization problem becomes non-convex, so you end up with multiple local optima and gradient descent may not find the global optimum.
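The loss usually used instead of squared error for logistic regression is the cross-entropy (log) loss, $L(\hat{y}, y) = -\big(y \log \hat{y} + (1 - y) \log(1 - \hat{y})\big)$, averaged over the training examples. The sketch below assumes that loss; the function name and the `eps` clipping (added to avoid `log(0)`) are illustrative choices, not part of the notes.

```python
import numpy as np

def cross_entropy_cost(y_hat, y, eps=1e-12):
    """Mean cross-entropy between predicted probabilities and 0/1 labels."""
    y_hat = np.clip(y_hat, eps, 1.0 - eps)  # keep log() finite
    losses = -(y * np.log(y_hat) + (1.0 - y) * np.log(1.0 - y_hat))
    return float(np.mean(losses))

# Hypothetical labels and two sets of predictions, for illustration only.
y = np.array([1.0, 0.0, 1.0])
good = np.array([0.9, 0.1, 0.8])  # close to the labels
bad = np.array([0.2, 0.7, 0.4])   # far from the labels

cost_good = cross_entropy_cost(good, y)
cost_bad = cross_entropy_cost(bad, y)
```

Predictions close to the labels give a lower cost than predictions far from them, which is the behaviour gradient descent relies on.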

4. Gradient Descent

Gradient descent is a first-order iterative optimization algorithm for finding the minimum of a function. -- wikipedia

    # Vanilla version of gradient descent.
    # This simple loop is the core idea of gradient descent.
    while True:
        weights_grad = evaluate_gradient(loss_fun, data, weights)
        weights += -step_size * weights_grad  # perform parameter update
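The loop above leaves `evaluate_gradient` undefined and never stops. As a runnable sketch, the version below applies the same update to logistic regression. It assumes the mean cross-entropy cost, whose gradient is $\frac{1}{m} X^\top(\hat{y} - y)$ for the weights and the mean of $\hat{y} - y$ for the bias. The toy data, step size and fixed step count are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def evaluate_gradient(X, y, w, b):
    """Gradient of the mean cross-entropy cost with respect to w and b."""
    error = sigmoid(X @ w + b) - y
    grad_w = X.T @ error / len(y)
    grad_b = float(np.mean(error))
    return grad_w, grad_b

def gradient_descent(X, y, step_size=0.5, num_steps=2000):
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(num_steps):  # fixed budget instead of `while True`
        grad_w, grad_b = evaluate_gradient(X, y, w, b)
        w -= step_size * grad_w  # perform parameter update
        b -= step_size * grad_b
    return w, b

# Hypothetical 1-D toy data: the label is 1 when x > 0.
X = np.array([[-2.0], [-1.0], [-0.5], [0.5], [1.0], [2.0]])
y = np.array([0.0, 0.0, 0.0, 1.0, 1.0, 1.0])

w, b = gradient_descent(X, y)
predictions = (sigmoid(X @ w + b) >= 0.5).astype(int)
```

A fixed number of steps is the simplest stopping rule; in practice one might instead stop when the cost or the gradient norm stops changing.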

Intuition for Gradient Descent:

Picture the cost function as a large bowl. A random position on the surface of the bowl is the cost of the current values of the weights.

The bottom of the bowl is the cost of the best set of weights, the minimum of the function.

The goal is to continue to try different values for the weights, evaluate their cost and select new weights that have a slightly better (lower) cost.

Repeating this process enough times will lead to the bottom of the bowl and you will know the values of the weights that result in the minimum cost.
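The bowl picture can be traced in one dimension. The sketch below uses a made-up bowl-shaped cost, $(w - 3)^2$, and records the cost at each step; the starting point, step size and number of steps are arbitrary assumptions.

```python
def cost(w):
    """A bowl-shaped toy cost with its minimum at w = 3."""
    return (w - 3.0) ** 2

def cost_gradient(w):
    """Derivative of the toy cost with respect to w."""
    return 2.0 * (w - 3.0)

w = -4.0          # arbitrary starting position on the bowl
step_size = 0.1
history = [cost(w)]

for _ in range(50):
    w -= step_size * cost_gradient(w)  # move slightly downhill
    history.append(cost(w))
```

Each entry in `history` is lower than the one before it, and `w` ends up close to 3, the bottom of this particular bowl. With a step size that is too large, the cost can increase instead of decrease.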

5. Derivatives

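Let

$$z^{(i)} = w^\top x^{(i)} + b, \qquad \hat{y}^{(i)} = \sigma(z^{(i)}) = \frac{1}{1 + e^{-z^{(i)}}} \tag{1}$$

where $w$: weights, $x^{(i)}$: i'th input (example), $b$: bias

then

$$\log \hat{y}^{(i)} = \log \frac{1}{1 + e^{-z^{(i)}}} = -\log\left(1 + e^{-z^{(i)}}\right) \tag{2}$$

$$\log\left(1 - \hat{y}^{(i)}\right) = \log\left(1 - \frac{1}{1 + e^{-z^{(i)}}}\right) = \log\left(e^{-z^{(i)}}\right) - \log\left(1 + e^{-z^{(i)}}\right) = -z^{(i)} - \log\left(1 + e^{-z^{(i)}}\right) \tag{3}$$

[ this used: $1 = \frac{1 + e^{-z^{(i)}}}{1 + e^{-z^{(i)}}}$, the 1's in numerator cancel, then we used: $\log\frac{x}{y} = \log x - \log y$ ]

The cost function is of the form:

$$J(w, b) = -\frac{1}{m} \sum_{i=1}^{m} \left[ y^{(i)} \log \hat{y}^{(i)} + \left(1 - y^{(i)}\right) \log\left(1 - \hat{y}^{(i)}\right) \right] \tag{4}$$

Plugging (2) and (3) into (4), we obtain:

$$J(w, b) = -\frac{1}{m} \sum_{i=1}^{m} \left[ -y^{(i)} \log\left(1 + e^{-z^{(i)}}\right) + \left(1 - y^{(i)}\right)\left(-z^{(i)} - \log\left(1 + e^{-z^{(i)}}\right)\right) \right]$$

which simplifies to:

$$J(w, b) = -\frac{1}{m} \sum_{i=1}^{m} \left[ y^{(i)} z^{(i)} - z^{(i)} - \log\left(1 + e^{-z^{(i)}}\right) \right] = -\frac{1}{m} \sum_{i=1}^{m} \left[ y^{(i)} z^{(i)} - \log\left(1 + e^{z^{(i)}}\right) \right] \tag{5}$$

where the second equality follows from:

$$-z^{(i)} - \log\left(1 + e^{-z^{(i)}}\right) = -\left[ \log e^{z^{(i)}} + \log\left(1 + e^{-z^{(i)}}\right) \right] = -\log\left(1 + e^{z^{(i)}}\right)$$

[ we used $\log x + \log y = \log(xy)$ ]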
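The simplification can be sanity-checked numerically. The sketch below assumes $z = w^\top x + b$ and $\hat{y} = \sigma(z)$, and compares the cross-entropy cost written in terms of $\hat{y}$ with the simplified form $-\frac{1}{m}\sum_i \left[ y^{(i)} z^{(i)} - \log(1 + e^{z^{(i)}}) \right]$. The function names and the random test values are illustrative.

```python
import numpy as np

def cost_original(z, y):
    """Cross-entropy cost written with y_hat = sigmoid(z)."""
    y_hat = 1.0 / (1.0 + np.exp(-z))
    return -np.mean(y * np.log(y_hat) + (1.0 - y) * np.log(1.0 - y_hat))

def cost_simplified(z, y):
    """Same cost after simplification: -(1/m) * sum(y*z - log(1 + e^z))."""
    return -np.mean(y * z - np.log1p(np.exp(z)))

# Hypothetical random scores and 0/1 labels.
rng = np.random.default_rng(0)
z = rng.normal(size=100)
y = rng.integers(0, 2, size=100).astype(float)

gap = abs(cost_original(z, y) - cost_simplified(z, y))
```

If the algebra is right, `gap` should be at the level of floating-point rounding error.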

All you need now is to compute the partial derivatives of (5). As